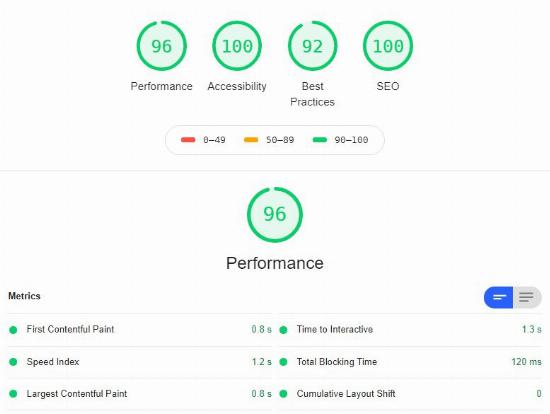

Web Apps Actualized got a 96 Google Lighthouse Performance Score

Check it Out

It's very hard to do, and here is how we did it. A score of 90 represents the top 5% of websites; ours is consistently 96.

Before we Start: Why do we Care?

Well, first off, it's a matter of pride as software engineers. We are telling people that we are going to charge them money for their website, so our own site must be a model of what we can do. Secondly, the Lighthouse score reflects things that matter for search results and user experience. Not directly: a great performance score doesn't guarantee search rankings, but it does indicate that our page would rate strongly on factors such as load time and performance.

So, what was the problem?

After the initial publication of our application, we ran the performance audit and got a 41. That's right! A flunking, terrible 41. It was heart-sinking. The application seemed to load almost instantly, and still our score was 41. To bring it up, we had to go through several steps: culling unnecessary JavaScript, lazy-loading JavaScript, implementing modern compression, pre-rendering HTML files, and finally redesigning the view-change system to ship the bare minimum HTML at initial load.

JavaScript

The first step toward getting our load time down was to optimize the JavaScript we were shipping across the wire. We took out all of the unneeded lines that were being shipped along with our framework, and then refactored some of the core components to make them smaller and give them fewer dependencies, which allowed us to cull even more unneeded JavaScript. Being the creators and curators of our framework greatly aided us in this process: we can change it as needed, down to every line of code. Real control.

Lazy-Loading

Lazy loading is the act of waiting to load resources until the moment they are needed. Implementing it was not hard in this particular application, because we already had a JavaScript closure that gets called on every section change. We just moved the section-specific JavaScript into individual files, and then put an asynchronous script request in the function that changes sections. For the case where a section is loaded directly, we already had a publishing pipeline in place, so we just added the individual files at the spot where we construct the head section of that section's index HTML file.
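As a rough illustration of the idea (not our actual engine code), here is a minimal sketch of a section loader that requests each section's script only once. The names `createSectionLoader`, `loadScriptTag`, and the `/js/` path are hypothetical, made up for this example.

```javascript
// Hypothetical helper: wraps any "load a script by URL" function so that each
// section's script is requested at most once, however often the section is
// visited.
function createSectionLoader(loadScript, urlForSection) {
  const pending = new Map(); // section name -> Promise returned by loadScript

  return function loadSection(name) {
    if (!pending.has(name)) {
      const request = loadScript(urlForSection(name));
      // Forget failed requests so a later visit can retry.
      request.catch(() => pending.delete(name));
      pending.set(name, request);
    }
    return pending.get(name);
  };
}

// Browser-side loader: appends an async <script> tag and resolves on load.
// It only touches `document` when called, so the helper above can be tested
// outside a browser with a stand-in loader.
function loadScriptTag(url) {
  return new Promise((resolve, reject) => {
    const script = document.createElement("script");
    script.src = url;
    script.async = true;
    script.onload = () => resolve(url);
    script.onerror = () => reject(new Error("Failed to load " + url));
    document.head.appendChild(script);
  });
}
```

In a browser this might be wired up as `const loadSection = createSectionLoader(loadScriptTag, (name) => "/js/" + name + ".js");` and then called from whatever function changes sections.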

Modern Compression

To get the best scores on the audit, we had to implement several forms of compression. The first is what is called minification. Minification is when you remove all of the unnecessary characters, such as whitespace and comments, from a source file. This makes the file smaller, so there is less data to send over the internet. The minifier we used was a combination of two free minifiers off the internet, together with some edits we made ourselves.

The next kind of compression we implemented was Brotli. Brotli is a compression format that works well on text files, making them smaller for transfer. To implement it, we downloaded and compiled Google's open-source Brotli compressor, installed it on our system, and then used it to write a Brotli-compressed file alongside each of our section HTML files.
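We used the compiled command-line compressor, but the same idea can be tried out with the Brotli support built into Node.js's `zlib` module. The sketch below is that swapped-in approach, not our pipeline; the sample HTML string is a made-up stand-in for a pre-rendered page.

```javascript
const zlib = require("zlib");

// Compress text with Brotli at maximum quality, which suits files that are
// compressed once at publish time and then served many times.
function brotliCompressText(text) {
  return zlib.brotliCompressSync(Buffer.from(text, "utf8"), {
    params: {
      [zlib.constants.BROTLI_PARAM_MODE]: zlib.constants.BROTLI_MODE_TEXT,
      [zlib.constants.BROTLI_PARAM_QUALITY]: zlib.constants.BROTLI_MAX_QUALITY,
    },
  });
}

// Stand-in for a pre-rendered section page (hypothetical content).
const html = "<section><p>Hello from a pre-rendered page.</p></section>\n".repeat(50);
const compressed = brotliCompressText(html);

console.log(Buffer.byteLength(html), "bytes before,", compressed.length, "bytes after");
```

In a publish step, the returned buffer would be written next to the original file, for example as `index.html.br`.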

The last form of compression we implemented was the WebP image format. WebP is an image format that typically produces considerably smaller files than PNG or JPG. To convert our images, we downloaded and compiled a WebP library from GitHub, and then inserted calls to the executable into our publishing pipeline.

The Brotli and WebP formats are not supported by every browser, so we had to implement .htaccess files that detect support from the request headers and route the requests accordingly.
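Our routing lives in .htaccess rules, but the decision they make can be sketched in JavaScript. The function below is only an illustration of that logic: the name `pickVariant` and the file naming scheme (`page.html.br`, `photo.webp`) are assumptions for the example, and it ignores quality values such as `br;q=0`.

```javascript
// Decide which pre-built file to serve for a request, based on its headers.
// Assumed naming: "page.html" has a sibling "page.html.br", and "photo.png"
// has a sibling "photo.webp".
function pickVariant(path, headers) {
  const acceptEncoding = (headers["accept-encoding"] || "").toLowerCase();
  const accept = (headers["accept"] || "").toLowerCase();

  // "gzip, deflate, br;q=0.9" -> ["gzip", "deflate", "br"]
  const encodings = acceptEncoding.split(",").map((e) => e.trim().split(";")[0]);

  if (/\.(html|css|js)$/.test(path) && encodings.includes("br")) {
    return { file: path + ".br", contentEncoding: "br" };
  }
  if (/\.(png|jpe?g)$/.test(path) && accept.includes("image/webp")) {
    return { file: path.replace(/\.(png|jpe?g)$/, ".webp"), contentEncoding: null };
  }
  // No supported alternative: fall back to the original file.
  return { file: path, contentEncoding: null };
}
```

Whatever does the routing, the responses should also carry a `Vary` header (`Accept-Encoding` for Brotli, `Accept` for WebP) so that caches keep the variants apart.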

Pre-Rendering HTML Files

One of the aspects of your file load that affects your score is TTFB (Time To First Byte). That's a measure of how long the browser waits between sending a request and receiving the first byte of the response, and part of it is the time your server takes to build the file it is going to respond with. By pre-rendering the HTML instead of calculating it on the server for each request, we have optimized our time to first byte. There is a downside to this: we end up with redundant information on the server. But that is OK in this application, because even with the extra data storage the total size of our site is still relatively small.
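To make the idea concrete, here is a minimal sketch of a publish step that builds every section's page ahead of time. It is not our pipeline: `prerenderSections`, `renderPage`, and the page template are hypothetical, and the template assumes trusted input (it does no escaping).

```javascript
// Hypothetical template: wraps a section's body in a complete HTML document.
// Assumes trusted input; a real template would escape the title.
function renderPage(title, body) {
  return (
    "<!DOCTYPE html><html><head><title>" + title + "</title></head>" +
    "<body>" + body + "</body></html>"
  );
}

// Build one static file per section, so the server only has to send a file
// instead of assembling the page on each request.
function prerenderSections(sections, render) {
  const files = {};
  for (const [name, body] of Object.entries(sections)) {
    files[name + ".html"] = render(name, body);
  }
  return files; // file name -> full HTML document
}
```

The returned map can then be written to disk at publish time, one file per entry. This is also where the redundancy comes from: every file repeats the shared head and page shell.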

Redesigning The View Change Engine

Even after we got to the end of all of that, we were still scoring between 81 and 90. That's a 'B'... but it's not an 'A'. We needed an A. Our results indicated that the remaining issue was rendering time. There were a couple of factors that contributed to this. The first was layout thrashing, which happens when a script alternates between reading layout values and changing styles, forcing the browser to recalculate layout over and over. We redesigned the engine to remove the thrashing.
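Our engine's fix is specific to our framework, but the general pattern is easy to show. The sketch below is a generic illustration with made-up function names: the first version interleaves layout reads and style writes, and the second finishes all reads before doing any writes.

```javascript
// Interleaved version: every pass reads layout right after the previous pass
// changed a style, so the browser may have to recalculate layout each time.
function doubleHeightsInterleaved(elements) {
  for (const el of elements) {
    const height = el.offsetHeight;        // read
    el.style.height = height * 2 + "px";   // write
  }
}

// Batched version: finish every read before starting any write.
function doubleHeightsBatched(elements) {
  const heights = elements.map((el) => el.offsetHeight); // read phase
  elements.forEach((el, i) => {
    el.style.height = heights[i] * 2 + "px";             // write phase
  });
}
```

Both functions take an array of elements (for example `Array.from(document.querySelectorAll(".card"))`, where `.card` is a placeholder selector) and produce the same result; only the order of reads and writes differs.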

Second, we were shipping too much HTML. We were shipping almost all of the sections as HTML, contained in different elements, and then using JavaScript to flip a switch that would display the appropriate element. This had a rendering cost, even though most of the elements were not visible, and it also made the page run slowly on slower devices. To fix this, we created a system with just two elements that are switched between, and the HTML is shipped as data in a JavaScript object. As the sections change, JavaScript populates the off-screen element with the HTML from the data object, and then a series of style changes fires the section transition. Oddly, many of our applications already behave this way; shipping so much HTML was a new idea we were trying out.
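A stripped-down sketch of the two-element approach might look like the following. This is an illustration under assumptions rather than our engine: `createViewSwitcher` and the `is-active` class are invented names, and the real transitions involve more style changes than a single class toggle.

```javascript
// Hypothetical two-panel switcher. `panels` holds the two DOM elements, and
// `sections` maps a section name to its HTML string (the data object).
function createViewSwitcher(panels, sections) {
  let active = 0; // index of the panel currently on screen

  return function showSection(name) {
    if (!Object.prototype.hasOwnProperty.call(sections, name)) {
      throw new Error("Unknown section: " + name);
    }
    const incoming = panels[1 - active];
    const outgoing = panels[active];

    incoming.innerHTML = sections[name];    // populate the off-screen panel
    incoming.classList.add("is-active");    // style change starts the transition
    outgoing.classList.remove("is-active");

    active = 1 - active;
    return incoming;
  };
}
```

Only two panels ever exist in the document, so the browser never has to lay out the markup of sections that are not on screen.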

This was the final step in our journey to an 'A' score on the Google Lighthouse performance audit.

Conclusion

We believe that all this work was totally worth it. Our site loads lightning fast, even on a slow connection. This will help our ranking in search engines and improve our users' experience, and when it comes down to it, isn't that the goal of our website?

Do you need to improve your score? These steps will help, and I hope this post did too. If you need to hire someone to do it for you, give Web Apps Actualized a call!

This blog application was produced and is managed by Web Apps Actualized

Programs and Resources Used

WebDev Suite (in house) - Microsoft Paint - Microsoft Paint 3D - Apache OpenOffice Draw - Microsoft Windows - Guitar Pro 6 - Stack Overflow - W3Schools - PHP.net - Web Browsers (Mostly Chrome) - Apache XAMPP - Git - CMake - Brotli Compression - JShrink Minifier Combined with Rodrigo54 Minifier - cwebp